Do We Still Need to Teach Kids to Code?

The short answer? Absolutely. But not for the reasons you might think.

With AI tools like Cursor, OpenAI Codex, and Claude Code that let you write code in seconds, some wonder whether coding education has become obsolete. The evidence from 2024-2025 tells a different story: coding literacy matters more than ever, though what we're really teaching isn't just syntax. It's computational thinking, problem-solving, and AI-capable digital citizenship.

🤖 Vibe Coding: The Reality Check

"Vibe coding" became Collins Dictionary's Word of the Year 2025—the practice of accepting AI-generated code without critically reviewing it. Platforms like Replit, Cursor, and v0.dev promise to turn natural language into working applications. The productivity gains look impressive: Google reports 25% of their code is AI-assisted, and Amazon and Microsoft see similar patterns.

But here's what the headlines miss: a rigorous spring 2025 study found that using state-of-the-art AI tools resulted in a 19% decrease in productivity on complex projects. Developers spent significant time prompting the AI and reviewing outputs that were wrong 61% of the time. Code quality suffered: 47% more lines per task, 4x more code duplication, and 68% more time resolving security vulnerabilities.

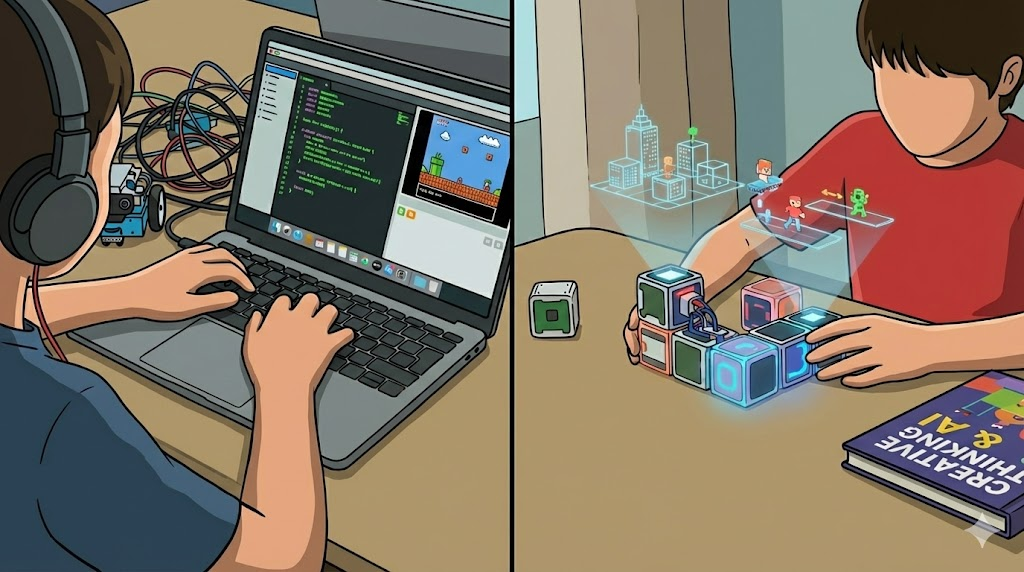

This is cognitive offloading in action. Students we work with treat AI as "Google that writes for them"—the code is good because the computer said so. This superficial engagement sits at the wrong end of the learning spectrum. The students who thrive? Those who use AI as an assistant, a guide, not a replacement for thinking.

We spend more time and effort prompting and re-prompting the AI than getting a high-quality output. The key is knowing whether what it's telling you is valid.

🎨 Where AI Actually Helps (and Where It Doesn't)

Here's the nuance: AI tools excel at specific, well-defined tasks while struggling with complex systems.

Take UI/UX work. When building the site you're reading now, we used AI heavily with Flowbite, a UI library that provides llm.txt files so LLMs can understand the entire component system. Being able to hand AI a CSS library and have it generate responsive designs? That's genuinely powerful. AI can fix padding issues in 30 minutes that might take hours manually (or, given how much I hate UI coding, days).

But AI struggles profoundly with system architecture, cross-service debugging, security-critical applications, and understanding business context. It excels at boilerplate but falters on production-grade code. This creates an essential teaching moment: students need to understand what AI does well and what requires human expertise.

🧠 Coding Is Problem-Solving, Not Syntax

The most compelling argument for coding education has never been about memorising syntax—it's about developing computational thinking that transfers across all domains.

When students debug code, they're learning something irreplaceable: persistence through failure, systematic troubleshooting, and treating errors as learning opportunities. A 2024 meta-analysis found coding enhances executive functions in children—response inhibition, working memory, cognitive flexibility, planning abilities, and problem-solving skills.

Debugging teaches the art of productive failure. As educational research emphasises, fixing bugs provides a low-stakes environment where students practice problem-solving and emotional regulation. These cognitive skills—decomposing problems, recognising patterns, thinking abstractly, persisting through failure—are precisely what humans still do better than AI when facing truly novel challenges.
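To make "systematic troubleshooting" concrete, here is a minimal sketch in Python of the kind of bug a student might meet. The function names and the scores are made up for illustration; the point is the routine of reproducing the problem on a tiny input, forming a hypothesis, and re-running the same case after the fix.

```python
def average(scores):
    """Buggy first attempt: divides by a hard-coded class size."""
    return sum(scores) / 10  # bug: assumes there are always 10 scores


def average_fixed(scores):
    """Fixed version: divide by the actual number of scores."""
    if not scores:
        raise ValueError("cannot average an empty list")
    return sum(scores) / len(scores)


# Step 1: reproduce the problem with the smallest input that shows it.
small_case = [80, 90]
print(average(small_case))        # 17.0, clearly wrong for two scores near 85

# Step 2: hypothesis (wrong divisor), fix it, and re-run the same case.
print(average_fixed(small_case))  # 85.0
```

Nothing here is advanced, and that's the point: the habit of shrinking a problem until the mistake is obvious is the transferable skill.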

In our previous article on why maths matters, we discussed how rigorous study builds cognitive muscle. Coding works the same way. A complex algorithm isn't just loops and variables; it's a heavy cognitive lift for logical reasoning. The process of understanding problems, devising strategies, implementing solutions, and refining them builds methodical thinking that becomes second nature.

On a personal note, the feeling of fixing a bug after hours of debugging can be both frustrating (realising it was a spelling mistake) and exhilarating (finding that random bug in someone else's code).

🤔 The AI Gap: Impressive Yet Limited

Understanding current AI capabilities helps calibrate what human skills remain essential. As of November 2025, Google's Gemini 3 and Claude Sonnet 4.5 represent state-of-the-art reasoning.

Gemini 3 achieves 95-100% on advanced maths competitions and 91.9% on PhD-level scientific knowledge. Claude Sonnet 4.5 dominates coding benchmarks with 77.2% on real-world software engineering tasks. These advances are genuine and significant.

Yet fundamental limitations persist. The ARC-AGI-2 benchmark, designed to test novel problem-solving that cannot be memorised, exposes the gap: humans achieve 60-100% accuracy while most AI models score below 5%. Apple's June 2025 research revealed that reasoning models undergo "complete accuracy collapse" when faced with highly complex tasks.

AI still falls short in symbolic interpretation, applying multiple interacting rules, performing actual causal reasoning, demonstrating genuine common sense, maintaining long-term consistency, providing explainable reasoning, and exhibiting authentic creativity. As the World Economic Forum put it: "AI is an augmenter, but not a replacement."

This means mathematics and critical thinking—the cognitive foundations underlying coding—remain areas where human capabilities are essential. Students who develop these thinking skills will work effectively with AI tools while providing the creative problem-solving and novel thinking that AI cannot replicate.

💻 Digital Literacy as a Fundamental Right

Coding literacy isn't optional anymore—it's foundational for meaningful participation in 21st-century society.

The 2024 State of Computer Science Education report shows that 11 states now require computer science for high school graduation, and 60% of U.S. public high schools offer foundational courses. Code.org's CEO Hadi Partovi says: "Computer science teaches problem-solving, data literacy, ethical decision-making and how to design complex systems. It empowers students not just to use technology but to understand and shape it."

The goal isn't creating a generation of professional programmers—it's ensuring every citizen has the foundational understanding to participate as creators rather than passive consumers. When we acquired language, we learned to speak, not just listen. When we acquired text, we learned to write, not just read. Now with computers, we must learn to program them, not just use them.

📝 Side Note: Teaching Markdown Matters

One overlooked component of digital literacy: teaching students to write for digital platforms. Markdown represents a better on-ramp than Word for several reasons.

Traditional word processors were created before the internet era of sharing and were designed to produce paper. Markdown forces you to write for the web, and that's where our world lives now. It's dead simple to learn (we should be teaching it!), works seamlessly with version control systems like Git, and serves as a bridge between writing and coding.

Real-world relevance matters: platforms like GitHub rely on Markdown for documentation, LLMs output in Markdown format, and it's used across professional technical communication. When students write in Markdown, they think structurally—what's a heading versus emphasis versus a list—rather than fiddling with fonts and margins. This semantic approach teaches clearer thinking.
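As a rough illustration of that structural thinking, the sketch below labels each line of a short Markdown snippet by the role it plays. It is a deliberately simplified classifier written for this article, not a real Markdown parser: `classify_line` and the sample text are hypothetical, and it only recognises `#` headings and `-`, `*` or `+` list items.

```python
def classify_line(line: str) -> str:
    """Label one line of Markdown by its structural role (simplified)."""
    stripped = line.strip()
    if not stripped:
        return "blank"
    if stripped.startswith("#"):
        # Count the leading '#' characters; a heading needs 1-6 of them
        # followed by a space.
        hashes = len(stripped) - len(stripped.lstrip("#"))
        if hashes <= 6 and stripped[hashes:hashes + 1] == " ":
            return f"heading-{hashes}"
    if stripped[:2] in ("- ", "* ", "+ "):
        return "list-item"
    return "paragraph"


sample = "# Lab report\n\nWe tested **three** designs.\n\n- Design A\n- Design B\n"

for line in sample.splitlines():
    print(f"{classify_line(line):<10} | {line}")
```

Because the structure lives in plain characters, a few lines of code can read it. That is the same property that makes Markdown friendly to Git and to LLMs.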

Plus, Markdown is plain text—a file from 2004 opens perfectly today and will in 2064. It's future-proofed in a way proprietary formats never will be.

🚀 What Students Actually Need

The students who will thrive develop:

Computational thinking foundations—decomposing problems, recognising patterns, thinking abstractly, designing algorithms. These transfer to every field.

Debugging as a mindset—persistence through failure and systematic troubleshooting matter far beyond programming.

Understanding when to use AI—knowing what tasks AI handles well (boilerplate, common patterns) versus what requires human judgment (architecture, security, novel problems). This metacognitive skill becomes the differentiator.

Digital citizenship—the ability to understand how technology works conceptually, evaluate information critically, and participate as creators.

As AI researcher Andrew Ng emphasised: "As these tools continue to make coding easier, this is the best time yet to learn to code." Why? Understanding fundamentals enables you to leverage AI effectively, evaluate its output critically, and maintain problem-solving skills that AI cannot replicate.

The path forward is clear: teach kids to code not for syntax memorisation, but for computational thinking, problem-solving resilience, and digital literacy. Embrace AI tools as learning accelerators, not replacements for foundational understanding. And emphasise that in a world of increasing automation, the most valuable human capabilities are precisely those AI cannot replicate—creativity, ethical reasoning, novel problem-solving, and the ability to ask the right questions.

The goal isn't producing software engineers. It's ensuring every student has the computational literacy to shape technology rather than be shaped by it—to be empowered creators in a digital world, not just passive consumers.

References

- Not So Fast: AI Coding Tools Can Actually Reduce Productivity - METR study on AI coding productivity

- The Rise of Vibe Coding in 2025 - Medium

- Vibe coding - Wikipedia

- The cognitive effects of computational thinking: A systematic review - ScienceDirect (2024)

- Coding and Computational Thinking Across the Curriculum - Review of Educational Research (2025)

- Gemini 3: Introducing the latest Gemini AI model - Google Blog

- Introducing Claude Sonnet 4.5 - Anthropic

- ARC-AGI 2: A New Challenge for Frontier AI - ARC Prize

- 2024 State of Computer Science Education - Code.org

- Word versus Markdown: more than mere semantics - Ben Balter

- Why scholars should write in Markdown - Stuart M. Shieber, Harvard